How I built Irish Celtic Jewellery on a shoestring

I recently took on a project to design and implement an e-commerce website in the jewellery space, for a non-technical client with 20+ years of domain knowledge. I worked on this project outside my day job, usually at weekends, so using my time efficiently was critical. This drove many of the key design and architectural decisions. In this post I’ll go through the process, the decisions I made, and why I made them.

A bit of my background

I am a full-stack JavaScript software engineer, with a primary skill stack including Node.js, Angular 11, UX, Java, GraphQL, SQL, Apache Kafka & Docker. For the past 10+ years I’ve been working in software development in an Agile environment, and for the last 8 years I’ve been self-employed as a contractor working on both the front-end and back-end, owning components that hold the critical data of 15+ million users. I’m the proud founder of a failed startup, which I regard as my most valuable training, as it ingrained in me a “founder’s mentality” and a “just get it done” attitude.

While it wasn’t a success, the process of building something from nothing is a life-changing, and career-changing, experience. To anybody who might be considering starting a company… just do it! Regardless of whether or not it succeeds (it’s likely it won’t by the way), the experience will be a tremendous credit to you and will set you apart from your peers. The more you put into it, the more it will change you. Quite literally.

The project

I embarked on the project armed with these core skills. The client’s requirements were very clear:

1. Quality
2. Security
3. Performant
4. On Budget (!)

TL;DR: If you want to check out the finished product, the website is Irish Celtic Jewellery. It offers a wide range of jewellery, all designed and handmade by goldsmiths based in Ireland. It costs less than €100/month on average to run, including all hosting costs and related expenses.

1. Quality

First off, there is no point in building a below-standard website in the competitive jewellery retail space. Big money has been spent on impressive websites backed by generous marketing budgets. With such a high-value, low-volume product, the market is very difficult for a small business to enter.

With no development budget or marketing budget to speak of, the focus instead was placed on creating a simple, well designed website. I immediately knew I would use Twitter Bootstrap as the foundation of the UI. It’s by far the best and simplest UI framework to pick up and get moving fast. It’s also opinionated, which I like, and for that reason is the perfect presentation layer to marry with Angular, my favourite ultra-opinionated UI framework.

Knowing my limits, I purchased a slick, professionally designed e-commerce “Bootstrap” theme for about $40. I highly recommend this approach. Just be sure to check the technology versions used in the theme. For me, that meant ensuring Bootstrap v4 and SCSS were used.

Monorepo vs Polyrepo?

Now, before getting stuck into the UI, I had to decide on the code structure: whether to house the UI code and the backend code in a single repo. This took me longer than I thought it would, but after an aborted attempt at a monorepo, I decided to keep the Angular UI entirely separate from the backend, and I have been happy with that decision ever since. It helps me keep the front-end and back-end completely separate in my mind, and there’s no confusion between front-end and back-end extensions in VS Code. The monorepo concept is probably best suited to multiple apps of the same type (e.g. several UIs).

The Frontend

So, after setting up a vanilla Angular 11 project, I set up the SCSS from the Bootstrap theme, and split the theme HTML into my first Angular components. (The entire website ended up consisting of 30+ components.)

Rather than reinventing the wheel, I relied on some of the excellent services available these days for handling payments and authentication.

For payments, I integrated with Stripe, which provides best-in-class support for various payment methods, fraud detection and the latest Strong Customer Authentication (SCA) security standards required in Europe.

For identity and authentication, I chose Auth0 (recently acquired by Okta), which probably has the best overall service thanks to its ease of use, extensive API and excellent documentation.

The Backend

For the backend, Node.js was an easy choice. The speed at which APIs can be rolled out with Node.js is unrivalled in my opinion, and there’s a lot to be said for staying in the JavaScript ecosystem when receiving JSON payloads from front-end clients.

I chose Koa as the Node.js web framework; it simplifies endpoints by allowing async/await in the API middleware. (Avoid callback hell and generators whenever you can.)

For example, this is the code for a fully functioning web server, including a single endpoint and a middleware that logs the duration of each request:

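```javascript
const Koa = require('koa');

const app = new Koa();

// Middleware: time each request and log its duration once downstream handlers finish.
app.use(async (ctx, next) => {
  const start = Date.now();
  await next(); // hand control to the next middleware (here, the endpoint below)
  const ms = Date.now() - start;
  console.log(`${ctx.method} ${ctx.url} - ${ms}ms`);
});

// Endpoint: respond to every request with a plain-text body.
app.use((ctx) => {
  ctx.body = 'Hello Koa';
});

app.listen(3000);
```

Database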

I chose a relational database as my persistence layer, and in the world of relational databases, Postgres is, in my view, the best option.

Search

To support search, I chose Elasticsearch, which, when optimized for fast reads (rather than fast indexing), is blazingly fast. It’s a powerful tool designed for precisely this purpose, and once configured, it just works. It supports fuzzy search, search-as-you-type, you name it.
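As a rough illustration of the fuzzy matching mentioned above, the sketch below builds an Elasticsearch Query DSL body for a typo-tolerant product search. The field names (`name`, `description`), the `^2` boost and the default page size are assumptions for illustration only; the resulting object would be sent as the body of a `_search` request against the product index, for example through the official `@elastic/elasticsearch` client.

```javascript
// Build a Query DSL body for a fuzzy, typo-tolerant product search.
// The field names are hypothetical; adjust them to your own index mapping.
function buildProductSearchQuery(term, { size = 20 } = {}) {
  return {
    size,
    query: {
      multi_match: {
        query: term,
        // Matches in the product name count twice as much as matches in the description.
        fields: ['name^2', 'description'],
        // 'AUTO' allows 0, 1 or 2 edits per term, depending on the term's length.
        fuzziness: 'AUTO',
      },
    },
  };
}

// "rnig" is a deliberate typo; fuzziness lets it still match "ring".
console.log(JSON.stringify(buildProductSearchQuery('claddagh rnig')));
```

Keeping the query construction in a plain function like this makes it easy to unit test without a running cluster.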

Cache

What about a non-persistent cache? Not everything should be written to the database or file system. For example, session context data should be quickly accessible to incoming requests. For this purpose, I chose Redis.
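A minimal cache-aside sketch for session data is shown below. It assumes a client with the node-redis v4 style `get(key)` and `set(key, value, { EX: seconds })` promise methods; `loadSession`, the `session:` key prefix and the 30-minute TTL are hypothetical. A tiny in-memory stand-in is included so the sketch runs without a Redis server.

```javascript
// Cache-aside: try Redis first, fall back to the slow source, then cache the result.
// `redis` is any client exposing node-redis v4 style get/set; `loadSession` is hypothetical.
async function getSession(redis, sessionId, loadSession, ttlSeconds = 1800) {
  const key = `session:${sessionId}`;
  const cached = await redis.get(key); // resolves to null when the key is missing
  if (cached !== null) return JSON.parse(cached);

  const session = await loadSession(sessionId);
  // EX sets an expiry in seconds, so stale sessions disappear on their own.
  await redis.set(key, JSON.stringify(session), { EX: ttlSeconds });
  return session;
}

// In-memory stand-in with the same get/set shape (it ignores expiry), for local experiments.
function createFakeRedis() {
  const store = new Map();
  return {
    async get(key) { return store.has(key) ? store.get(key) : null; },
    async set(key, value) { store.set(key, value); return 'OK'; },
  };
}

// Usage: the second lookup is served from the cache, so loadSession runs only once.
const redis = createFakeRedis();
let loads = 0;
const loadSession = async (id) => { loads += 1; return { id, cart: [] }; };
getSession(redis, 'abc', loadSession)
  .then(() => getSession(redis, 'abc', loadSession))
  .then((session) => console.log(session.id, loads)); // prints "abc 1"
```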

Blog

Rather than paying for a hosted service, I set up my own Ghost blogging instance. Ghost is a modern, easy-to-use blogging platform which I highly recommend.

Build & Deploy Tool

To efficiently build, test and deploy my code, I set up my own containerized Jenkins instance.

Architecture

So how do you run all these free tools cheaply? I didn’t want to pay for them as hosted services, so to use them for free I needed to host them myself, and to keep costs down, I intended to run them all on one server. The obvious option was a Virtual Private Server (VPS). There are many VPS providers nowadays, but I’ve been a happy customer of Rimu Hosting for many years, so I chose them.

Next, I really didn’t want to suffer the heartache of installing all these tools on the same machine, which is generally fraught with dependency clashes. So I chose a containerized architecture. Running each component in its own Docker container on the same host keeps each process isolated from the others, improving overall stability.

Overall, I’ve got 9 Docker containers running on a single VPS, costing €50/month, with the following resources:

- 1 CPU core (which turned out to be insufficient; more on this later)

- 10GB RAM

- 64GB disk

- 100GB data transfer

Docker Compose vs Docker Swarm vs Kubernetes

While Kubernetes is the king of container orchestration, it’s overkill for this use case. It’s ideal for larger, multi-server deployments, but if you only need to run several containers on a single machine, Docker Compose will do the job nicely and simply.

Continuous Deployment

To speed up testing and deployment, I configured Git webhooks that fire whenever my front-end or back-end code changes. These cause Jenkins to automatically execute the respective jobs, which are configured to:

- Check out the code

- Execute all unit tests

- Build production-ready code

- Deploy to the test environment and execute integration tests and End-to-End (e2e) tests.

- Deploy to production and execute integration tests and End-to-End (e2e) tests.

Logging and Metrics via the ELK Stack (plus Beats!)

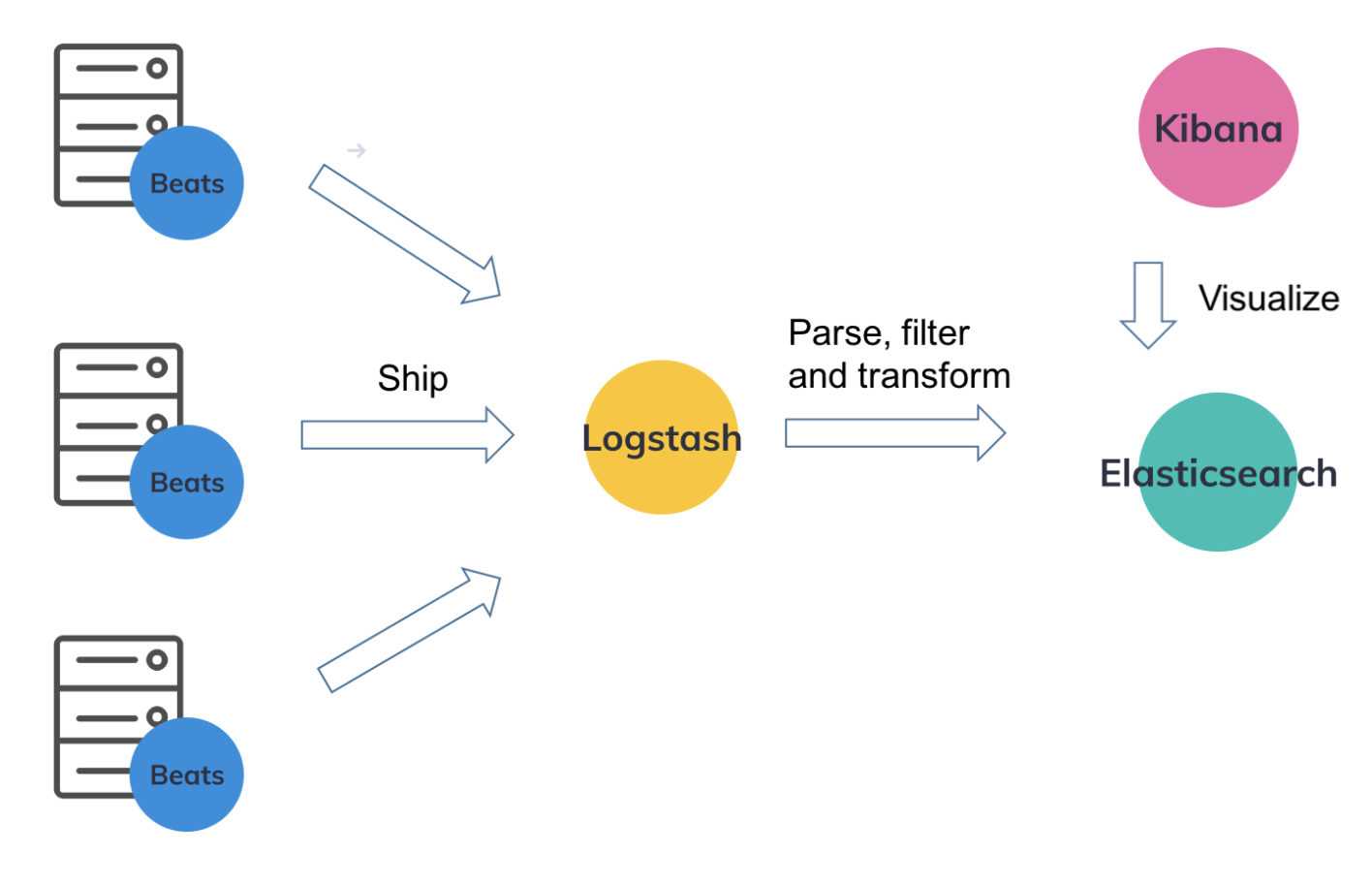

To monitor all the moving parts, I’d recommend setting up Kibana and Logstash, which, together with Elasticsearch, are known as the ELK Stack. The simplest way to do this is with 3 Docker containers; with a little configuration, they will cover all your logging and metrics monitoring needs.

Logs from your key applications and other important services (e.g. web servers) across the many Docker containers can be gathered by Filebeat and shipped to Logstash, which parses and filters them, transforms them if necessary, and indexes them in Elasticsearch, where they can be searched and viewed via Kibana.
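One way to make the Logstash parsing step easier, offered here as an assumption rather than part of the setup described above, is to have the Node.js app write one JSON object per line, which can then be decoded as JSON instead of pattern-matched as free text. The middleware below is a variant of the earlier Koa timing example; the field names and the `@timestamp` key are illustrative choices.

```javascript
// Serialise one log event as a single line of JSON (newline-delimited JSON).
function toLogLine(fields) {
  return JSON.stringify({ '@timestamp': new Date().toISOString(), ...fields });
}

// Koa-style middleware: logs method, URL, status and duration for every request.
// `write` defaults to stdout, which a container's log driver (and Filebeat) can pick up.
// If a downstream handler throws, nothing is logged here; an error handler would cover that case.
function jsonRequestLogger(write = (line) => process.stdout.write(`${line}\n`)) {
  return async (ctx, next) => {
    const start = Date.now();
    await next();
    write(toLogLine({
      method: ctx.method,
      url: ctx.url,
      status: ctx.status,
      durationMs: Date.now() - start,
    }));
  };
}
```

In a Koa app this would be registered with `app.use(jsonRequestLogger())`, in place of the `console.log` timing middleware.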

The same applies for server metrics, which can be shipped to Logstash by a sister app of Filebeat called (you guessed it) Metricbeat.

You will then be able to configure nice-looking dashboards in Kibana that display all your server metrics, including CPU and memory usage per Docker container.

Datadog

The premium alternative to this setup is Datadog, which has expanded its capabilities over the years to the point where it is now a one-stop shop for all your monitoring needs. However, its free tier is very limited and it quickly gets expensive. It’s also not possible to host Datadog yourself; it can only be used by running local agents that ship logs and metrics to the hosted Datadog service. Maybe on the next project…

Testing

I didn’t forget about testing. :) The most important aspect of ensuring quality is surely having good testing processes and methodologies in place. To this end, I’ve got 3 kinds of tests:

Unit tests

Great at catching regressions quickly and giving confidence to code changes prior to deployment.

For Angular frontends, the standard unit test runner is Karma.

On the Node.js backend, I prefer to use Mocha, especially since it now supports running tests in parallel, which greatly speeds things up when you’ve got many tests.

E2E tests

Great at ensuring key user scenarios, which may touch several components, are actually still functional after a deployment.

On the Node.js backend, I like to use AVA, a modern, minimalist test runner that runs tests in parallel.

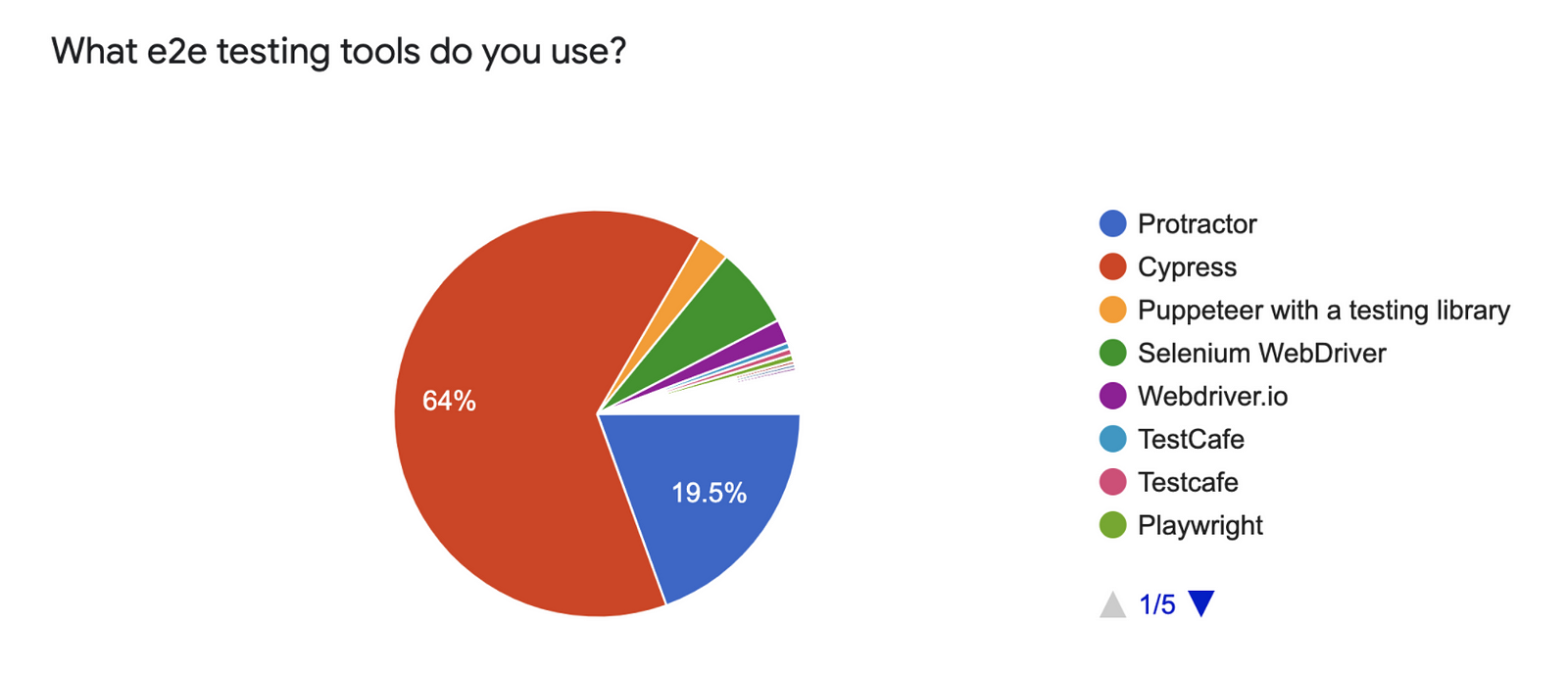

As for the Angular frontend, the standard way to run E2E tests has always been Protractor… however, the Google team behind Protractor recently announced that Protractor will be officially deprecated in May 2021. So it’s unknown which testing framework will step up as the replacement. My money is on WebdriverIO, which I’ve heard lots of good things about.

Performance Tests

There’s no load testing tool that reigns supreme at the moment. Two I’ve used a lot are Gatling and Artillery.

Gatling: Tests are written in Scala and execute in a JVM. If that is acceptable to you, it’s a strong choice. Of all the tools I’ve tried, Gatling generates by far the best reports (which use Highcharts).

Artillery: Runs in Node.js, tests can be written in JavaScript and YAML, and it avoids the need for a JVM and Scala. It’s simple and fast to write tests. However, the visual reports it generates are poor, and years later there has been no improvement, so I would not recommend investing in it. Also, when compared with other testing tools, its performance rates very poorly: in my experience, Artillery executes requests 10x slower than Gatling.

I’ve heard good things about hey, wrk and Vegeta, but have no experience with them yet.

Summary

The tools used to create this e-commerce website ended up being:

- UI/UX: Twitter Bootstrap HTML & SCSS theme

- Frontend Logic: Angular 11

- Backend Logic: Node.js

- Search Engine: Elasticsearch

- Cache: Redis

- Database: Postgres

- Containers: Docker

- Orchestration: Docker Compose

- Continuous Delivery: Jenkins

Overall, I’ve got 9 Docker containers running on a single VPS, costing €50/month.

2. Security

A major feather in the security cap of the website is the fact that no sensitive information is ever sent to the backend.

- Customers’ passwords are only sent to Auth0.

- Sensitive credit card information is only sent to Stripe.

Authentication

Once a user has logged in via Auth0, each authenticated API request carries a JSON Web Token (JWT), the industry standard for security tokens, which the website backend verifies.
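To make the verification step concrete, here is a minimal sketch using only Node’s built-in `crypto` module. It assumes RS256-signed tokens (Auth0’s default signing algorithm for APIs) and that the tenant’s public key is already available as a PEM string, e.g. fetched from the tenant’s JWKS endpoint; the issuer and audience values are placeholders. In production, a maintained library such as `jsonwebtoken` or `jose` is the safer choice.

```javascript
const crypto = require('node:crypto');

// Decode one base64url-encoded JWT segment (Node 16+ supports the 'base64url' encoding).
const decodeSegment = (segment) => Buffer.from(segment, 'base64url');

// Verify an RS256 JWT and return its claims, or throw if anything is off.
function verifyRs256Jwt(token, publicKeyPem, { issuer, audience, nowSeconds = Date.now() / 1000 } = {}) {
  const parts = token.split('.');
  if (parts.length !== 3) throw new Error('Malformed token');
  const [headerB64, payloadB64, signatureB64] = parts;

  // Pin the algorithm so a token cannot ask the server to use a weaker one (or "none").
  const header = JSON.parse(decodeSegment(headerB64).toString('utf8'));
  if (header.alg !== 'RS256') throw new Error(`Unexpected alg: ${header.alg}`);

  // The signature covers the raw "header.payload" string (RSA PKCS#1 v1.5 with SHA-256).
  const valid = crypto.verify(
    'sha256',
    Buffer.from(`${headerB64}.${payloadB64}`),
    publicKeyPem,
    decodeSegment(signatureB64),
  );
  if (!valid) throw new Error('Invalid signature');

  // Only trust the claims once the signature has been checked.
  const claims = JSON.parse(decodeSegment(payloadB64).toString('utf8'));
  if (typeof claims.exp !== 'number' || claims.exp <= nowSeconds) throw new Error('Token expired');
  if (issuer && claims.iss !== issuer) throw new Error('Unexpected issuer');
  if (audience) {
    const audiences = Array.isArray(claims.aud) ? claims.aud : [claims.aud];
    if (!audiences.includes(audience)) throw new Error('Unexpected audience');
  }
  return claims;
}
```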

Authorization rules are then applied to ensure that the user can only access their data (for example their order history, or the status of a recent order) and nobody else’s data.
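The ownership rule can be sketched as a Koa route handler. This assumes the verified JWT claims have been placed on `ctx.state.user` (the convention used by `koa-jwt`), that route parameters come from a router such as `@koa/router`, and that `loadOrder` and the `customerId` field are hypothetical names. Comparing the order’s owner with the token’s `sub` claim is what keeps one customer out of another’s data.

```javascript
// Factory for a hypothetical "GET /orders/:id" handler.
// loadOrder(id) is a hypothetical data-access function resolving to an order or null.
function makeGetOrderHandler(loadOrder) {
  return async function getOrder(ctx) {
    const userId = ctx.state.user.sub; // subject claim of the verified JWT
    const order = await loadOrder(ctx.params.id);

    // Reply 404 for both "missing" and "not yours", so other customers' order IDs can't be probed.
    if (!order || order.customerId !== userId) {
      ctx.throw(404, 'Order not found');
    }
    ctx.body = order;
  };
}
```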

Payments

Sensitive credit card information and billing details are sent directly from the customer’s browser to Stripe, which is one of the largest and most secure payment platforms in the world.

As mentioned earlier, Stripe also handles fraud detection and the Strong Customer Authentication (SCA) security standards required in Europe.

Once a payment has completed successfully, Stripe notifies the jewellery website with a webhook event for the corresponding PaymentIntent, created via Stripe’s Payment Intents API.
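Stripe delivers such notifications as webhook events (for example `payment_intent.succeeded`), and each request carries a `Stripe-Signature` header that should be checked before the event is trusted. The official `stripe` package does this with `stripe.webhooks.constructEvent(rawBody, signatureHeader, endpointSecret)`. The stdlib-only sketch below shows what that check involves, based on Stripe’s documented scheme (HMAC-SHA256 over `timestamp.rawBody` using the endpoint’s signing secret); the 300-second tolerance matches the library’s default, but treat the details as assumptions to check against Stripe’s current documentation.

```javascript
const { createHmac, timingSafeEqual } = require('node:crypto');

// Verify a Stripe-Signature header of the form "t=<unix time>,v1=<hex hmac>[,v1=...]".
// rawBody must be the exact request body string, not re-serialised JSON.
function verifyStripeSignature(rawBody, header, endpointSecret,
  { toleranceSeconds = 300, nowSeconds = Math.floor(Date.now() / 1000) } = {}) {
  let timestamp = null;
  const signatures = [];
  for (const item of header.split(',')) {
    const [key, value] = item.split('=');
    if (key === 't') timestamp = Number(value);
    if (key === 'v1') signatures.push(value);
  }
  if (!Number.isFinite(timestamp) || signatures.length === 0) return false;

  // Reject events whose timestamp is too far from now, to limit replay attacks.
  if (Math.abs(nowSeconds - timestamp) > toleranceSeconds) return false;

  // Stripe signs "<timestamp>.<raw request body>" with HMAC-SHA256.
  const expected = createHmac('sha256', endpointSecret)
    .update(`${timestamp}.${rawBody}`)
    .digest();

  // Constant-time comparison against every v1 signature present in the header.
  return signatures.some((sig) => {
    const given = Buffer.from(sig, 'hex');
    return given.length === expected.length && timingSafeEqual(given, expected);
  });
}
```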

3. Performant

Good performance was a requirement from the very beginning. Rather than cheating good design by beefing up the server resources, I tackled some key areas:

WEBP Image Format

Image size can be a major performance bottleneck, but thankfully there is a newer image format, WebP, that is widely supported these days. Developed by Google, it supports both lossy and lossless compression, and converted images regularly see size reductions of 80% or more. Adopting it has been a bit tricky, however, as support for it was conspicuously absent from Safari… that is, until September 2020, when Apple finally got their finger out.

Image CDN

There is great benefit to be gained by using an Image Content Delivery Network (CDN). These deliver cached copies of your images to clients without sending the request to your web server.

I found ImageKit to be an excellent CDN service, which also provides automatic conversion to WebP format at multiple dimensions. This meant I could support responsive images via the srcset attribute of the img tag, and deliver the best-fitting image optimized for the client’s viewport. Three birds with one stone? Yes please!
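A small helper for building the `srcset` string is sketched below. It assumes ImageKit’s URL-based transformations, where a `tr=w-<width>` query parameter requests a resized copy and format conversion happens automatically based on what the browser accepts. The URL, image path, widths and `sizes` breakpoints are hypothetical.

```javascript
// Build a srcset value offering the same image at several widths.
// baseUrl is a hypothetical ImageKit URL without a query string,
// e.g. "https://ik.imagekit.io/<your_id>/rings/claddagh.jpg".
function buildSrcset(baseUrl, widths) {
  return widths
    .map((w) => `${baseUrl}?tr=w-${w} ${w}w`)
    .join(', ');
}

// Render an <img> tag; `sizes` tells the browser how wide the image will be displayed,
// so it can pick the smallest candidate that still looks sharp. `alt` is assumed already HTML-escaped.
function responsiveImg(baseUrl, alt, widths = [320, 640, 960]) {
  const fallback = `${baseUrl}?tr=w-${widths[widths.length - 1]}`;
  return `<img src="${fallback}" srcset="${buildSrcset(baseUrl, widths)}" `
    + `sizes="(max-width: 576px) 100vw, 50vw" alt="${alt}" loading="lazy">`;
}

console.log(buildSrcset('https://ik.imagekit.io/demo/ring.jpg', [320, 640]));
// prints "https://ik.imagekit.io/demo/ring.jpg?tr=w-320 320w, https://ik.imagekit.io/demo/ring.jpg?tr=w-640 640w"
```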

Minification and Tree-shaking

Angular 11 has excellent build tooling, which produces surprisingly small minified JavaScript and CSS bundles. It achieves this by first removing large chunks of unused JavaScript, mainly from imported libraries (“tree-shaking”), and then minifying the remaining code.

Tree-shaking and minification reduce:

- the javascript bundle size from 8 MB to 1.8 MB.

- the CSS bundle size from 281 KB to 242 KB.

Asset Compression

I configured the Nginx web server to compress all static content delivered by the website. With just a few lines in the Nginx configuration file, static assets such as JavaScript, CSS and HTML are gzipped before being transmitted from the web server to the client’s browser, which then decompresses them automatically.

Asset Compression further reduces:

- the javascript bundle size from 1.8 MB to just 273 KB

- the CSS bundle size from 242 KB to 46 KB

The total size of a typical uncached gallery page, including all JS, CSS and HTML assets as well as the WebP images, is approx. 750 KB.
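To check what gzip will save on your own build output, Node’s built-in `zlib` module is enough. This sketch reports raw and gzipped sizes for every `.js` and `.css` file directly inside a build directory. The directory path is up to you, and because this uses zlib’s default compression level while Nginx’s `gzip_comp_level` defaults to 1, the numbers Nginx actually serves may be somewhat larger.

```javascript
const fs = require('node:fs');
const path = require('node:path');
const zlib = require('node:zlib');

// Return { file, rawBytes, gzipBytes } for each .js/.css file directly inside `dir`.
function compressedSizes(dir) {
  return fs.readdirSync(dir)
    .filter((name) => /\.(js|css)$/.test(name))
    .map((name) => {
      const raw = fs.readFileSync(path.join(dir, name));
      return { file: name, rawBytes: raw.length, gzipBytes: zlib.gzipSync(raw).length };
    });
}
```

For example, `console.table(compressedSizes('dist/browser'))` would print a table for an Angular build, assuming that (hypothetical) output directory.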

Lighthouse

An excellent tool for testing the quality of your web pages is Lighthouse, available in Chrome DevTools. Built by Google, it’s actually the engine behind Google’s PageSpeed Insights. It is particularly good at shedding light on issues in the following areas:

- Performance: Together with SEO, performance is probably the most important success indicator. Lighthouse provides several key metrics, such as First Contentful Paint (FCP), Largest Contentful Paint (LCP) and Time To Interactive (TTI).

- Best Practices: Helpful advice on anything that doesn’t follow best practices.

- Search Engine Optimization (SEO): Extremely useful for detecting common pitfalls that can harm a page’s ranking. More about SEO below.

- Accessibility (a11y): Accessibility should no longer be an afterthought. Making your website accessible from Day 1 is highly recommended, as it is a lot easier than retrofitting a11y later on.

SEO

A key part of performance, and something that cannot be overlooked these days, is SEO. Marketing and SEO are critical to the success of an e-commerce website. However, in my case there was zero budget for marketing, so an even greater focus on SEO was required.

Since I had already decided to build the frontend using Angular, it was very important to use Angular’s Server-Side Rendering (SSR) framework: Angular Universal. The main benefit of SSR is greatly improved SEO. Pages are rendered on the server before the HTML is sent to the client, so the first HTML response already contains the page content, which search engine crawlers can index without having to execute JavaScript.

An SEO strategy can be split into 2 components: On-Page SEO and Off-Page SEO.

- On-Page SEO focuses on ensuring that the website code is error-free and fast, and has all the standard optimizations in place, e.g. `title` tags, `meta` tags, `alt` attributes on images, easily followable `a` tags, a single `h1` on each page, good content with minimal duplication, etc. There is a long list of optimizations that can be made, which will be covered in more detail in a separate post. Google PageSpeed Insights is your friend here.

- The second, and more laborious, component of SEO is Off-Page SEO, which is just another way of saying link building. Gone are the days when you could pay a dodgy service to generate hundreds of low-quality links, or spam comments on blogs and forums. Google has become much better at detecting spammy, low-quality links and penalizes a website if it suspects the administrator has used “black hat” link-building methods.

The best way to generate links is to build a vibrant, active social media presence and to write content-rich posts on websites with a high “Domain Rating” (DR). A very useful tool for monitoring your link-building progress is Ahrefs. It’s a bit on the expensive side for a small company just getting started (€100/month), but worth subscribing to, at least for a month once in a while, to monitor your backlinks and search ranking for particular keywords. Site optimizations and backlink creation can take a long time to have a noticeable effect; typically you won’t see an impact for 6 months, so don’t lose heart. It requires dedication and perseverance.

4. On Budget

The budget for this project was very limited. The ask was to keep the expenses to approx €100/month. We actually achieved this, and I’ll tell you how.

Firstly, this kind of budget is only possible if you’re building a website yourself, and don’t count the cost of your own time. That key point aside, the costs were kept to the following:

Server Hosting

The main cost was, unsurprisingly, hosting. As I already mentioned, to keep costs down, I run all the necessary components in Docker containers on a single VPS with Rimu Hosting.

However, with 9 Docker containers running, I noticed a degradation in performance, which I put down to the single CPU core. I asked Rimu Hosting about adding extra cores and they kindly gave me a second dedicated core free of charge!

This resulted in a hosting cost of only €60/month for the following:

- 2 CPU cores

- 10GB RAM

- 64GB SSD storage

- 100GB Data Transfer Allowance

What other expenses are there?

- Domain renewal (€25/year)

- SEO analysis via Ahrefs (€100/month). I recommend paying for this service for a single month, once every 6 months, which costs €200/year. This equates to roughly €17/month.

- “What about SSL certs?” I hear you ask… Fortunately, paying for SSL certs is now a thing of the past. Let’s Encrypt issues free certificates (including wildcard certificates) that are valid for 3 months, and tools such as Certbot can renew them automatically. Just stick the renewal in a cron job, set it to run nightly, and forget about it: when your certs are coming up for renewal, it’ll take care of it for you.

- Auth0: Free for small companies.

- Stripe: Zero monthly fee + approx 2% of each sale.

So all-in, the total monthly cost is approx. €79, which leaves some headroom in the budget for boosting server resources as traffic volumes increase over time. Not bad at all!
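The monthly figure is easy to reproduce. The sketch below spreads the annual items over twelve months, using the hosting, domain and Ahrefs figures quoted in this post; Stripe’s per-sale fee and Auth0’s free tier are left out because they add no fixed monthly cost.

```javascript
// Running costs in euro, using the figures quoted in this post.
const costs = {
  vpsHostingPerMonth: 60,
  domainPerYear: 25,
  ahrefsPerYear: 2 * 100, // one month's subscription, twice a year
};

// Monthly total: fixed monthly hosting plus the annual items divided by 12.
function monthlyTotal({ vpsHostingPerMonth, domainPerYear, ahrefsPerYear }) {
  return vpsHostingPerMonth + (domainPerYear + ahrefsPerYear) / 12;
}

console.log(monthlyTotal(costs).toFixed(2)); // prints "78.75"
```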

Conclusion

I hope you found this post helpful or useful in some way. I wanted to show that it is possible to build a fully operational, quality e-commerce website end to end on a very modest budget.

If you’d like to read more, please go ahead and follow me. I’ll be writing several spin-off articles, diving deeper into some of the many topics raised in this post.

Happy coding!